After five years of building automations in Home Assistant, I finally pulled the trigger on something I’d been contemplating for months: completely replacing my Amazon Alexa ecosystem with Home Assistant’s Voice Preview Edition. Recent privacy changes from Amazon were the final straw, but what I discovered during this migration was both more challenging and more technically satisfying than I anticipated.

Bottom line up front: Home Assistant Voice Preview Edition delivers on its privacy promises and offers unprecedented customization, but the migration requires significant technical investment and realistic expectations about current limitations.

The motivation: Privacy

Amazon’s recent privacy policy changes around voice data collection finally pushed me over the edge. The idea of having always-on recording devices throughout my home, feeding data to advertising algorithms, became increasingly uncomfortable. Home Assistant’s Voice Preview Edition promised local processing, open-source transparency, and complete data ownership, exactly what I was looking for. [Home Assistant]

The $59 device features professional-grade hardware with dual microphones, XMOS audio processing, and an ESP32-S3 chip that can handle local wake word detection. [The Pi Hut] More importantly, it includes a physical mute switch that actually cuts power to the microphones.

Hardware setup: Surprisingly smooth initial experience

The physical setup proved surprisingly straightforward. The Voice Preview Edition connected to my existing Home Assistant instance within minutes, and the initial device registration worked flawlessly. The build quality impressed me, the audio processing hardware rivals devices costing significantly more, and the LED feedback ring provides clear visual status indicators. [Home Assistant]

However, I quickly discovered that “working” and “working well” are very different things when it comes to voice assistants. The default wake word detection had maybe a 50% success rate from across the room, and voice recognition accuracy felt inconsistent at best. Alexa was really good at picking up my voice when the TV was on, or if I was listening to a Podcast. Home Assistant Voice struggles in this department.

“Okay Nabu” proves problematic

Here’s where we hit our first major adoption hurdle. My partner has been struggling terribly with remembering the wake word “Okay Nabu.” Honestly, I don’t blame her, it’s not exactly intuitive. We’d been using “OK Computer” for years with our previous setup, and breaking that muscle memory has proven nearly impossible.

“I keep saying ‘OK Computer’ and nothing happens,” she told me last week, clearly frustrated. “Why can’t we just use something normal?”

The system supports three wake words: “Okay Nabu,” “Hey Jarvis,” and “Hey Mycroft,” but none feel natural after years of established patterns. [Home Assistant Community] I know custom wake words are on the roadmap, but I wish they were available now. This seemingly small issue has become one of the biggest barriers to family adoption.

The effective detection range compounds the problem. Unlike our old setup that worked reliably from across large rooms, the Voice Preview Edition often requires being within 1-2 meters and speaking clearly. [Geeky Gadgets] My partner finds herself walking closer to the device and raising her voice, which feels unnatural compared to casual voice interactions we’d grown accustomed to.

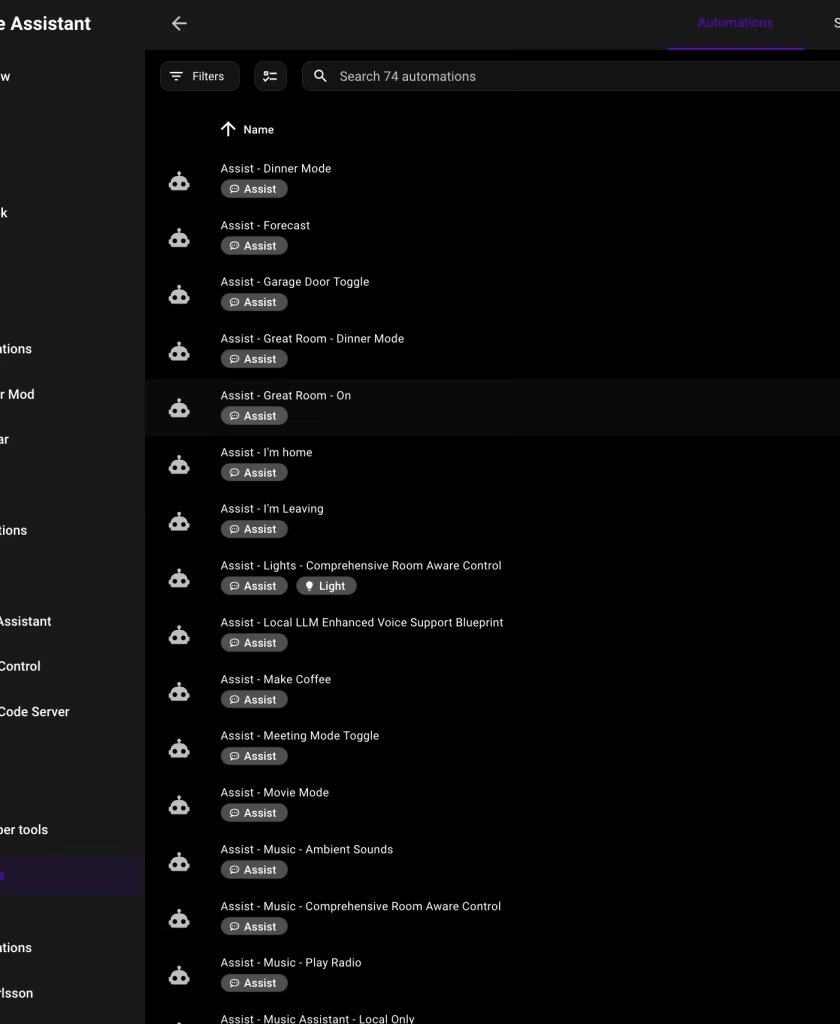

Rebuilding every automation from scratch

This is where my expectations collided with reality. I naively assumed that migrating from Alexa would involve some configuration tweaking and maybe updating a few device names. especially since I already used Home Assistant scripts for all of my Alexa automation. Instead, I found myself rebuilding every single voice command as a custom Home Assistant automation.

“Can’t you just copy the settings from Alexa?” my partner asked during week two of my automation development marathon. The answer, unfortunately, was no. Alexa’s natural language processing had spoiled us both. Commands like “turn on the living room lights” or “play some jazz music” worked reliably with minimal setup. Home Assistant’s voice system, by contrast, requires explicit automation creation for every interaction pattern you want to support.

Here’s an example of what I had to build for basic light control. This automation handles dozens of different voice commands for a single room:

alias: Assist - Lights - Comprehensive Room Aware Control

description: >

Controls lights in the room with voice commands for turning on/off, dimming,

brightening, and activating reading, nightlight, vaporwave, and red alert scenes.

triggers:

- command:

- turn off the lights

- lights off

- kill the lights

- shut off the lights

- power down the lights

- cut the lights

- lights out

- switch off the lights

- darken the room

- go dark

# ... continues for 40+ variations

trigger: conversation

actions:

- variables:

device_area: "{{ area_id(trigger.device_id) }}"

lights_in_area: |

{{ expand(area_entities(device_area))

| selectattr('domain','equalto','light')

| map(attribute='entity_id')

| reject('equalto', 'light.elgato_key_light')

| reject('equalto', 'light.elgato_ring_light')

| list }}

What started as a weekend project turned into weeks of automation development. Every room needed its own variation, every device type required custom logic, and every family member’s preferred phrasing had to be anticipated and programmed.

“Are you still working on that voice thing?” became a running joke in our household. The answer was always yes.

The intensive automation development process forced me to architect better systems than I’d ever built with Alexa. Instead of relying on Amazon’s black-box natural language processing, I created variable-driven templates that scale across multiple rooms and device types. [Home Assistant]

The automation above dynamically detects which room the voice command originated from and targets only the lights in that area. This level of context awareness was impossible to achieve reliably with Alexa’s more limited automation capabilities. I developed similar patterns for music control, calendar queries, and climate control. Each automation became a reusable template that could be applied to new rooms or device types with minimal modification.

The Home Assistant community has developed sophisticated blueprints for common voice automation patterns that provide excellent starting points. [Home Assistant Community] These blueprints saved me probably 20+ hours of development time once I discovered them.

ChatGPT integration: Easier than expected

One of the most pleasant surprises was how straightforward the ChatGPT integration proved to be. Home Assistant’s OpenAI Conversation integration requires only an API key and basic configuration. [Home Assistant] The setup took maybe 15 minutes, and suddenly my voice assistant could handle general knowledge questions, creative tasks, and complex reasoning that the default local processing couldn’t manage.

“Wait, it can actually answer questions now?” my partner asked when I demonstrated asking about the weather forecast and getting a detailed response. The difference was night and day compared to the limited local processing capabilities.

I also experimented with connecting my local Ollama LLM server, which worked well for privacy-sensitive queries but introduced noticeable latency. [Peyanski] The hybrid approach, using local processing for home automation and ChatGPT for everything else, proved most practical.

The streaming response capability impressed me most. Unlike Alexa’s sometimes clunky responses, ChatGPT integration provides natural, conversational answers that feel genuinely intelligent. The cost remains reasonable for typical household usage. Expect around $2-5 monthly for moderate use with GPT-3.5-turbo.

Audio quality reality check: Down the rabbit hole of self-hosted music

Here’s where my technical enthusiasm hit another wall. The Voice Preview Edition’s audio output quality was, frankly, terrible. The built-in speaker works fine for system notifications, but any serious audio playback, music, podcast responses, or extended ChatGPT answers, sounded tinny and unclear.

“Why does it sound like it’s coming from a tin can?” my partner asked during one of our first music tests. She wasn’t wrong.

This forced me down an unexpected rabbit hole that’s become its own multi-month project. Initially, I connected external speakers via the 3.5 mm output, but that created configuration challenges around audio routing and volume control. [Smart Home Junkie]

That led me into the world of self-hosted music solutions. I’m currently experimenting with Music Assistant as the audio coordinator, Navidrome for my personal music library, and a Lyrion Squeezebox server for multi-room audio. I’ve been testing WiiM smart speakers as potential endpoints, but honestly, I haven’t landed on anything definitive yet.

“So now you’re rebuilding our entire music system too?” my partner asked when she saw me researching yet another audio protocol. The answer, unfortunately, was yes.

Each solution solves different problems but creates new ones. Music Assistant provides excellent Home Assistant integration but requires learning another interface. Navidrome handles my personal music collection beautifully but doesn’t integrate seamlessly with streaming services. The WiiM speakers sound great but add another layer of complexity to an already complex system.

Some users successfully connect Echo devices as external speakers by using the 3.5 mm input, creating an interesting hybrid where Amazon hardware plays audio from a privacy-focused system. The irony wasn’t lost on me, but I’m trying to avoid falling back on Amazon hardware after going through all this effort to escape their ecosystem.

Performance reality: Significant gaps versus commercial alternatives

Community testing shows average response times of 3.89 seconds for local processing compared to near-instantaneous responses from commercial assistants. [Geeky Gadgets] Voice recognition accuracy rates of 37% locally and 58% with cloud processing create frustrating experiences requiring exact command syntax.

“Why do I have to say ‘turn on the lights’ exactly that way?” my partner asked after several failed attempts with natural variations. The system’s rigid command structure feels primitive compared to Alexa’s more forgiving interpretation.

Wake word detection proves particularly inconsistent. The system supports three options (“Okay Nabu,” “Hey Jarvis,” “Hey Mycroft”), but effective range remains limited to 1-2 meters for reliable activation. [Home Assistant Community] Commercial assistants work reliably from across large rooms, while the Voice Preview Edition often requires moving closer or raising your voice.

Language support varies dramatically. Local processing works best with American English and Spanish, while other languages show 40%+ word error rates. [Home Assistant] Regional accents significantly impact recognition accuracy, with non-American English dialects reporting particular difficulties.

Family acceptance: The ultimate test

The technical challenges were manageable, but family acceptance proved the real measurement of success. My partner’s tolerance for technological experimentation has limits, and voice assistants that require multiple attempts or specific phrasing quickly become frustrating.

“I just want to turn on the lights without thinking about it,” she explained after a particularly frustrating evening of failed commands.

Interestingly, we developed different usage patterns than we had with Alexa. Instead of casual queries throughout the day, voice commands became more intentional and focused on home automation rather than general assistance. The system works best for users who treat it as a smart home interface rather than a general-purpose assistant. That being said the ChatGPT interface makes this more forgiving.

Training family members on effective phrasing became essential. Commands like “turn on the living room lights” work reliably, while natural variations like “brighten up the living room” require specific automation creation. [Home Assistant Community] The learning curve is steep, but the payoff is much more predictable behavior than Alexa’s sometimes mysterious interpretation failures.

Advanced automation patterns: Where the system shines

Once past the initial setup challenges, the advanced automation capabilities began justifying the migration effort. Context-aware automations that respond based on time of day, room occupancy, or current home state enable sophisticated interactions impossible with commercial assistants. [Home Assistant]

For example, my calendar integration automation dynamically handles queries about “today,” “tomorrow,” or specific days of the week:

- variables:

delta: >-

{{ 1 if next_day and part_day else daily_mapping | selectattr('phrases',

'contains', phrase) | map(attribute='delta') | list | first | default(0) }}

start_time: >-

{{ (daily_mapping + day_part_mapping) | selectattr('phrases',

'contains', phrase) | map(attribute='start') | list | first }}

This level of dynamic template processing enables natural language interactions that adapt to context while remaining completely local and private.

“OK, that’s actually pretty cool,” my partner admitted when I demonstrated asking “what’s on my calendar tomorrow morning” and getting a contextually relevant response. These moments of technical elegance help justify the migration complexity.

Current limitations justify preview designation

The “Preview” designation proves accurate when examining current limitations. GitHub issues reveal ongoing challenges with wake word detection failures, audio playback problems, TLS certificate issues, and response interruptions. [GitHub] Community reports indicate 10-90% failure rates for voice announcements depending on configuration.

Setup complexity remains prohibitive for mainstream users. Installation requires existing Home Assistant knowledge, complex networking configuration, and extensive troubleshooting. [Swyx] The system cannot answer general knowledge questions without AI integration, lacks robust music streaming support, and provides no equivalent to Alexa’s vast skill ecosystem.

Feature gaps prevent direct commercial assistant replacement for most users. Basic functions like weather reporting require manual automation creation, music control needs exact phrasing, and third-party service integration requires custom development.

The verdict: Technical success, mainstream challenges

After several months of daily use, the migration succeeded for my specific use case but wouldn’t work for most households. The system excels as a privacy-focused smart home control interface for users willing to invest significant setup time and accept current limitations.

“It’s gotten better,” my partner acknowledged recently, “but I still miss just being able to ask questions about random stuff.” That captures the trade-off perfectly—we gained privacy and customization but lost the casual, conversational assistant experience that made voice control feel magical.

What works excellently: Privacy implementation is comprehensive with local processing options and open-source transparency. [Michael Leen] Customization possibilities far exceed commercial alternatives. Home automation control rivals or exceeds Alexa’s capabilities once properly configured.

What needs improvement: Voice recognition accuracy requires substantial development. Setup complexity prevents mainstream adoption. Missing features like general knowledge queries and robust music integration limit practical utility.

Who should consider this migration: Technical users who prioritize privacy over convenience, existing Home Assistant power users comfortable with YAML automation development, and households willing to accept reduced functionality for complete data ownership. [Smart Home Solver]

Who should wait: Families expecting plug-and-play Alexa replacement, users without existing Home Assistant experience, or anyone requiring reliable voice assistant functionality for daily tasks.

The Home Assistant Voice Preview Edition delivers on its promise as an open-source, privacy-focused voice assistant foundation. However, the “Preview” designation accurately reflects its current state—promising technology that needs substantial development before reaching consumer readiness. For the right user with appropriate expectations, it provides compelling benefits unavailable elsewhere in the voice assistant market.